6.3. Topic Extraction in RSS-Feed Corpus#

Author: Johannes Maucher

Last update: 2018-11-16

In the notebook 01gensimDocModelSimple the concepts of dictionaries, document models, tf-idf and similarity have been described using an example of a samll document collection. Moreover, in notebook 02LatentSemanticIndexing LSI based topic extraction and document clustering have also been introduced by a small playground example.

The current notebook applies these concepts to a real corpus of RSS-Feeds, which has been generated and accessed in previous notebooks of this lecture:

6.3.1. Read documents from a corpus#

The contents of the RSS-Fedd corpus are imported by NLTK’s CategorizedPlaintextCorpusReader as already done in previous notebooks of this lecture:

#!pip install wordcloud

from nltk.corpus.reader import CategorizedPlaintextCorpusReader

from nltk.corpus import stopwords

stopwordlist=stopwords.words('english')

from wordcloud import WordCloud

rootDir="../Data/ENGLISH"

filepattern=r"(?!\.)[\w_]+(/RSS/FeedText/)[\w-]+/[\w-]+\.txt"

#filepattern=r"(?!\.)[\w_]+(/RSS/FullText/)[\w-]+/[\w-]+\.txt"

catpattern=r"([\w_]+)/.*"

rssreader=CategorizedPlaintextCorpusReader(rootDir,filepattern,cat_pattern=catpattern)

singleDoc=rssreader.paras(categories="TECH")[0]

print("The first paragraph:\n",singleDoc)

print("Number of paragraphs in the corpus: ",len(rssreader.paras(categories="TECH")))

The first paragraph:

[['Radar', 'trends', 'to', 'watch', ':', 'May', '2022', 'April', 'was', 'the', 'month', 'for', 'large', 'language', 'models', '.'], ['There', 'was', 'one', 'announcement', 'after', 'another', ';', 'most', 'new', 'models', 'were', 'larger', 'than', 'the', 'previous', 'ones', ',', 'several', 'claimed', 'to', 'be', 'significantly', 'more', 'energy', 'efficient', '.'], ['The', 'largest', '(', 'as', 'far', 'as', 'we', 'know', ')', 'is', 'Google', '’', 's', 'GLAM', ',', 'with', '1', '.', '2', 'trillion', 'parameters', '–', 'but', 'requiring', 'significantly', 'less', 'energy', 'to', 'train', 'than', 'GPT', '-', '3', '.'], ['Chinchilla', 'has', '[…]']]

Number of paragraphs in the corpus: 40

techdocs=[[w.lower() for sent in singleDoc for w in sent if (len(w)>1 and w.lower() not in stopwordlist)] for singleDoc in rssreader.paras(categories="TECH")]

print("Number of documents in category Tech: ",len(techdocs))

Number of documents in category Tech: 40

generaldocs=[[w.lower() for sent in singleDoc for w in sent if (len(w)>1 and w.lower() not in stopwordlist)] for singleDoc in rssreader.paras(categories="GENERAL")]

print("Number of documents in category General: ",len(generaldocs))

Number of documents in category General: 40

alldocs=techdocs+generaldocs

print("Total number of documents: ",len(alldocs))

Total number of documents: 80

6.3.1.1. Remove duplicate news#

def removeDuplicates(nestedlist):

listOfTuples=[tuple(liste) for liste in nestedlist]

uniqueListOfTuples=list(set(listOfTuples))

return [list(menge) for menge in uniqueListOfTuples]

techdocs=removeDuplicates(techdocs)

generaldocs=removeDuplicates(generaldocs)

alldocs=removeDuplicates(alldocs)

print("Number of unique documents in category Tech: ",len(techdocs))

print("Number of unique documents in category General: ",len(generaldocs))

print("Total number of unique documents: ",len(alldocs))

Number of unique documents in category Tech: 20

Number of unique documents in category General: 18

Total number of unique documents: 38

alltechString=" ".join([w for doc in techdocs for w in doc])

print(len(alltechString))

allgeneralString=" ".join([w for doc in generaldocs for w in doc])

print(len(allgeneralString))

7364

2146

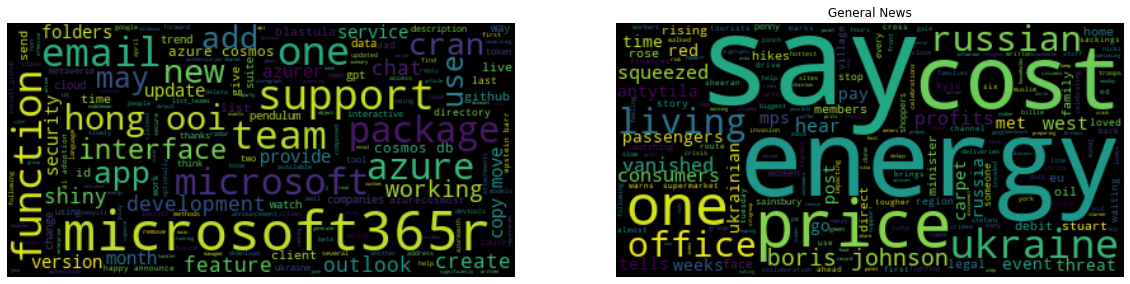

wordcloudTech=WordCloud().generate(alltechString)

wordcloudGeneral=WordCloud().generate(allgeneralString)

%matplotlib inline

import matplotlib.pyplot as plt

plt.figure(figsize=(20,18))

plt.title("Tech News")

plt.subplot(1,2,1)

plt.imshow(wordcloudTech, interpolation='bilinear')

plt.axis("off")

plt.subplot(1,2,2)

plt.imshow(wordcloudGeneral, interpolation='bilinear')

plt.title("General News")

plt.axis("off")

(-0.5, 399.5, 199.5, -0.5)

6.3.2. Gensim-representation of imported RSS-feeds#

from gensim import corpora, models, similarities

dictionary = corpora.Dictionary(alldocs)

dictionary.save('../Data/feedwordsDE.dict') # store the dictionary, for future reference

print(len(dictionary))

820

import random

first_doc = techdocs[0]

print(first_doc)

first_vec = dictionary.doc2bow(first_doc)

print(f"Sparse BoW representation of single document: {first_vec}")

for word in random.choices(first_doc, k=3):

print(f"Index of word {word} is {dictionary.token2id[word]}")

['recommendations', 'us', 'live', 'household', 'communal', 'device', 'like', 'amazon', 'echo', 'google', 'home', 'hub', 'probably', 'use', 'play', 'music', 'live', 'people', 'may', 'find', 'time', 'spotify', 'pandora', 'algorithm', 'seems', 'know', 'well', 'find', 'songs', 'creeping', '[…]']

Sparse BoW representation of single document: [(0, 1), (1, 1), (2, 1), (3, 1), (4, 1), (5, 1), (6, 1), (7, 2), (8, 1), (9, 1), (10, 1), (11, 1), (12, 1), (13, 1), (14, 2), (15, 1), (16, 1), (17, 1), (18, 1), (19, 1), (20, 1), (21, 1), (22, 1), (23, 1), (24, 1), (25, 1), (26, 1), (27, 1), (28, 1)]

Index of word echo is 6

Index of word device is 5

Index of word spotify is 24

Sparse BoW representation of entire tech-corpus and entire general-news-corpus:

techcorpus = [dictionary.doc2bow(doc) for doc in techdocs]

generalcorpus = [dictionary.doc2bow(doc) for doc in generaldocs]

print(generaldocs[:3])

[['women', 'waiting', 'hear', 'vanished', 'loved', 'ones', 'stop', 'village', 'region', 'west', 'kyiv', 'hear', 'story', 'someone', 'vanished'], ['sainsbury', 'says', 'shoppers', 'watching', 'every', 'penny', 'supermarket', 'profits', 'jump', 'warns', 'tougher', 'times', 'ahead', 'consumers', 'finances', 'squeezed'], ['brings', 'back', 'passengers', 'cross', 'channel', 'route', 'sackings', 'tuesday', 'marks', 'first', 'time', 'drive', 'passengers', 'tourists', 'use', 'almost', 'six', 'weeks']]

6.3.3. Find similiar documents#

index = similarities.SparseMatrixSimilarity(techcorpus, num_features=len(dictionary))

sims = index[first_vec]

#print(list(enumerate(sims)))

simlist = sims.argsort()

print(simlist)

mostSimIdx=simlist[-2]

[ 2 7 18 4 11 5 9 8 6 12 16 19 17 3 14 13 10 15 1 0]

print("Refernce document is:\n",first_doc)

print("Most similar document:\n",techdocs[mostSimIdx])

Refernce document is:

['microsoft365r', 'outlook', 'support', 'cran', 'hong', 'ooi', 'happy', 'announce', 'microsoft365r', 'cran', 'outlook', 'email', 'support', 'quick', 'summary', 'new', 'features', 'send', 'reply', 'forward', 'emails', 'optionally', 'composed', 'blastula', 'emayili', 'copy', 'move', 'emails', 'folders', 'create', 'delete', 'copy', 'move', 'folders', 'add', 'remove', 'download', 'attachments', 'sample', 'write', 'email', 'using', 'blastula', 'library', 'microsoft365r', '1st', 'one', 'personal', 'microsoft', 'account', '2nd', 'work', 'school', 'account', 'outl']

Most similar document:

['outlook', 'client', 'support', 'microsoft365r', 'available', 'beta', 'test', 'hong', 'ooi', 'announcement', 'beta', 'outlook', 'email', 'client', 'part', 'microsoft365r', 'package', 'install', 'github', 'repository', 'devtools', '::', 'install_github', '("', 'azure', 'microsoft365r', '")', 'client', 'provides', 'following', 'features', 'send', 'reply', 'forward', 'emails', 'optionally', 'composed', 'blastula', 'emayili', 'copy', 'move', 'emails', 'folders', 'create', 'delete', 'copy', 'move', 'folders', 'add', 'remove', 'download', 'attachments', 'plan', 'submit', 'cran', 'sometime', 'next', 'month', 'period', 'public', 'testing', 'please', 'give', 'try', 'give', 'feedback', 'either', 'via', 'email', 'opening', 'issue', '...']

6.3.4. Find topics by Latent Semantic Indexing (LSI)#

6.3.4.1. Generate tf-idf model of corpus#

tfidf = models.TfidfModel(techcorpus)

corpus_tfidf = tfidf[techcorpus]

print("Display TF-IDF- Model of first 2 documents of the corpus")

for doc in corpus_tfidf[:2]:

print(doc)

Display TF-IDF- Model of first 2 documents of the corpus

[(13, 0.055648773453563255), (19, 0.15879721214500278), (20, 0.15879721214500278), (21, 0.31759442429000556), (22, 0.08531278174327886), (23, 0.10056217885820973), (24, 0.12205499694414082), (25, 0.24410999388828164), (26, 0.12205499694414082), (27, 0.24410999388828164), (28, 0.1469688608034478), (29, 0.08531278174327886), (30, 0.12205499694414082), (31, 0.12205499694414082), (32, 0.17062556348655772), (33, 0.24410999388828164), (34, 0.12205499694414082), (35, 0.08531278174327886), (36, 0.24410999388828164), (37, 0.12205499694414082), (38, 0.10056217885820973), (39, 0.04857056654241692), (40, 0.15879721214500278), (41, 0.08531278174327886), (42, 0.19145989097204336), (43, 0.24410999388828164), (44, 0.055648773453563255), (45, 0.04857056654241692), (46, 0.12205499694414082), (47, 0.15879721214500278), (48, 0.20112435771641946), (49, 0.15879721214500278), (50, 0.15879721214500278), (51, 0.12205499694414082), (52, 0.12205499694414082), (53, 0.15879721214500278), (54, 0.15879721214500278), (55, 0.10056217885820973), (56, 0.12205499694414082), (57, 0.11129754690712651), (58, 0.10056217885820973), (59, 0.12205499694414082), (60, 0.12205499694414082)]

[(22, 0.06757935386523892), (24, 0.09668419738474388), (25, 0.09668419738474388), (26, 0.09668419738474388), (27, 0.19336839476948775), (28, 0.058209687039009896), (29, 0.06757935386523892), (30, 0.09668419738474388), (31, 0.09668419738474388), (32, 0.13515870773047783), (33, 0.19336839476948775), (34, 0.09668419738474388), (35, 0.06757935386523892), (36, 0.19336839476948775), (37, 0.09668419738474388), (39, 0.03847451034573397), (42, 0.15166233545091412), (43, 0.19336839476948775), (45, 0.03847451034573397), (46, 0.09668419738474388), (48, 0.15931791067295262), (51, 0.09668419738474388), (52, 0.09668419738474388), (55, 0.07965895533647631), (57, 0.04408141519405023), (61, 0.12578904090424883), (62, 0.09668419738474388), (63, 0.058209687039009896), (64, 0.09668419738474388), (65, 0.09668419738474388), (66, 0.09668419738474388), (67, 0.058209687039009896), (68, 0.25157808180849767), (69, 0.37736712271274647), (70, 0.09668419738474388), (71, 0.12578904090424883), (72, 0.12578904090424883), (73, 0.09668419738474388), (74, 0.07965895533647631), (75, 0.25157808180849767), (76, 0.09668419738474388), (77, 0.09668419738474388), (78, 0.12578904090424883), (79, 0.07965895533647631), (80, 0.12578904090424883), (81, 0.12578904090424883), (82, 0.06757935386523892), (83, 0.12578904090424883), (84, 0.12578904090424883), (85, 0.12578904090424883), (86, 0.12578904090424883), (87, 0.09668419738474388), (88, 0.12578904090424883), (89, 0.12578904090424883), (90, 0.12578904090424883), (91, 0.12578904090424883), (92, 0.12578904090424883), (93, 0.12578904090424883), (94, 0.12578904090424883), (95, 0.12578904090424883)]

6.3.4.2. Generate LSI model from tf-idf model#

techdictionary = corpora.Dictionary(techdocs)

NumTopics=20

lsi = models.LsiModel(corpus_tfidf, id2word=dictionary, num_topics=NumTopics) # initialize an LSI transformation

corpus_lsi = lsi[corpus_tfidf]

Display first 10 topics:

lsi.print_topics(10)

[(0,

'-0.349*"microsoft365r" + -0.189*"microsoft" + -0.156*"outlook" + -0.155*"move" + -0.155*"emails" + -0.155*"folders" + -0.155*"copy" + -0.142*"teams" + -0.133*"365" + -0.132*"email"'),

(1,

'0.195*"move" + 0.195*"folders" + 0.195*"emails" + 0.195*"copy" + 0.174*"client" + -0.163*"app" + 0.150*"blastula" + 0.144*"outlook" + 0.137*"account" + -0.125*"shiny"'),

(2,

'-0.180*"gpt" + -0.179*"models" + -0.179*"significantly" + -0.179*"energy" + -0.144*"tool" + -0.144*"verbal" + -0.144*"descriptions" + -0.144*"surprising" + -0.133*"metaverse" + -0.128*"2022"'),

(3,

'-0.225*"packages" + -0.215*"azurer" + 0.188*"azure" + 0.159*"cosmos" + 0.159*"db" + -0.156*"update" + -0.126*"june" + -0.126*"caching" + 0.119*"azurecosmosr" + 0.114*"functions"'),

(4,

'-0.204*"teams" + -0.181*"team" + 0.145*"app" + 0.137*"metaverse" + 0.135*"azure" + -0.135*"()" + -0.133*"list_teams" + -0.126*"chats" + -0.116*"list" + 0.115*"cosmos"'),

(5,

'0.254*"metaverse" + 0.164*"could" + 0.163*"live" + 0.134*"barr" + 0.134*"cause" + 0.134*"epstein" + 0.133*"think" + 0.131*"get" + -0.129*"packages" + 0.127*"find"'),

(6,

'0.316*"ai" + 0.237*"adoption" + 0.200*"security" + 0.200*"companies" + 0.200*"data" + 0.181*"secure" + 0.181*"future" + 0.125*"ukraine" + 0.107*"like" + 0.101*"help"'),

(7,

'-0.249*"party" + -0.249*"middleman" + -0.246*"two" + -0.213*"ukraine" + -0.142*"cyber" + -0.142*"offensive" + -0.124*"building" + -0.124*"actually" + -0.124*"despite" + -0.124*"comes"'),

(8,

'0.305*"pendulum" + 0.305*"slowly" + 0.305*"swing" + 0.239*"way" + 0.153*"underneath" + 0.153*"oscillate" + 0.153*"watching" + 0.153*"pendulums" + 0.153*"cliche" + 0.153*"earth"'),

(9,

'-0.293*"barr" + -0.293*"cause" + -0.293*"epstein" + 0.226*"find" + -0.196*"ms" + 0.178*"live" + -0.147*"think" + -0.115*"app" + 0.113*"hub" + 0.113*"probably"')]

6.3.4.3. Determine the most relevant documents for a selected topic#

Generate a numpy array docTopic. The entry in row \(i\), column \(j\) of this array is the relevance value for topic \(j\) in document \(i\).

import numpy as np

numdocs= len(corpus_lsi)

docTopic=np.zeros((numdocs,NumTopics))

for d,doc in enumerate(corpus_lsi): # both bow->tfidf and tfidf->lsi transformations are actually executed here, on the fly

for t,top in enumerate(doc):

docTopic[d,t]=top[1]

print(docTopic.shape)

print(docTopic)

(20, 20)

[[-6.30607635e-01 5.36371130e-01 -5.55603925e-02 -1.10429913e-02

1.02211588e-01 -4.19538185e-02 5.23341721e-02 -2.01324536e-02

9.36476226e-03 1.48590022e-02 -5.64484933e-02 -1.76516387e-02

-2.52711931e-02 3.12620379e-02 3.97842969e-03 9.22752537e-03

-2.30506582e-02 -6.48237130e-02 -2.02715658e-01 -4.94701015e-01]

[-6.04091276e-01 5.73628893e-01 -6.18943219e-02 6.50059353e-02

1.36255030e-01 -6.08886307e-02 1.62551604e-02 -4.69463769e-02

1.80244057e-03 2.64716158e-02 -4.91570907e-02 4.11850780e-04

-1.38490360e-02 2.17868203e-02 -5.68861208e-02 -1.21444467e-03

1.17206225e-03 -1.12703328e-02 8.58949481e-02 5.08620690e-01]

[-3.03491346e-02 -3.01121930e-02 -6.77552212e-02 3.23638202e-02

-4.63767220e-02 1.53101006e-01 1.56397015e-01 -7.49283302e-01

8.67874972e-02 3.13360382e-02 1.88340293e-01 5.51809912e-01

4.37718308e-02 -1.52561947e-01 -5.61926302e-02 5.06158688e-02

-2.66355793e-02 -7.51413228e-03 2.26857889e-02 -1.96536220e-02]

[-2.38455142e-01 -2.19308458e-01 3.91593208e-02 -5.52749115e-01

8.52552324e-02 -3.03535230e-01 1.20765156e-01 -4.48972721e-02

-4.98519173e-02 1.09683911e-01 -3.41206046e-01 6.74118871e-02

1.00182191e-01 -2.17779384e-01 1.85474429e-01 2.48904436e-01

6.05801293e-03 4.01479785e-01 -1.39144820e-01 4.57341981e-02]

[-1.30514812e-01 -2.61599673e-01 -2.26025793e-02 3.51731394e-01

3.09718477e-01 -1.38122021e-01 -2.03790332e-01 2.26756102e-02

2.37883789e-02 2.09293409e-01 -4.34966679e-01 2.98409037e-01

-2.08772531e-01 -6.63282576e-02 7.43404012e-02 -4.95913075e-01

8.03070307e-02 -1.54263843e-02 -6.70225583e-02 -4.82225129e-03]

[-2.39291785e-02 -9.08826976e-02 -1.50914455e-01 1.84879449e-01

1.21793894e-01 -8.71784281e-02 6.09229394e-01 2.38374387e-01

1.17823122e-01 8.31075571e-02 4.36596145e-02 1.42232253e-01

6.09593569e-01 2.07261955e-01 4.14597348e-02 -1.56094605e-01

-4.61079434e-03 2.59587458e-03 -1.30098110e-02 5.16299877e-03]

[-6.00555645e-02 -4.24333870e-02 -5.37637107e-01 8.42846021e-02

-2.83060151e-01 -3.15977611e-01 -2.30785026e-01 2.72557924e-03

-4.79833413e-02 -1.04435348e-01 1.02944148e-01 7.97312448e-02

5.60029929e-03 9.24503801e-02 3.87596688e-01 -2.15310685e-02

-5.24117200e-01 -1.97832407e-02 -1.64142487e-02 2.01135662e-02]

[-5.20350369e-02 -1.27413098e-01 -1.70000161e-01 1.52500791e-01

-2.59232036e-01 1.88208007e-03 2.92959380e-01 -4.84237389e-01

-3.82058687e-02 2.65143039e-02 -3.73275357e-01 -5.49920209e-01

-7.65006791e-02 2.18507134e-01 -1.21632828e-01 -1.31537160e-01

-3.59551551e-02 9.40152611e-02 3.95207661e-02 -9.41403597e-03]

[-3.31438174e-02 -5.85697458e-02 -2.37501046e-01 -7.34280933e-02

1.38103118e-01 3.49474204e-01 1.25963065e-02 9.85750386e-02

-2.14843025e-01 -6.98404822e-01 -3.62263659e-01 1.47303665e-01

1.09220812e-01 -1.05286508e-01 -2.31339278e-01 -1.09470554e-03

-1.33637238e-01 -3.11444068e-02 -1.79309295e-02 6.94238150e-03]

[-1.61050673e-01 -2.91378078e-01 3.24281690e-03 4.59682332e-01

3.28382424e-01 -1.46496542e-01 -1.22444828e-01 -5.54832087e-02

9.10591673e-02 -1.30813831e-02 -1.11762204e-01 -1.33900871e-02

1.55657016e-02 2.04509065e-01 -5.91564921e-02 6.69807910e-01

-2.56235721e-04 -1.22461081e-01 -1.82093736e-04 -1.17298188e-02]

[-3.29249374e-01 -1.25962351e-01 5.96766984e-02 -1.05894085e-01

-3.82966304e-01 1.41928175e-01 5.42660489e-03 2.31757937e-01

1.26086962e-01 3.81256938e-02 -5.32890500e-02 3.62634554e-01

-2.47599249e-01 5.31280907e-01 -2.13788006e-01 3.97709499e-02

-5.12263062e-03 2.61484597e-01 1.68221955e-01 -3.40522935e-02]

[-3.25893490e-02 -8.76820895e-02 -2.27412859e-01 1.41942539e-01

6.05588332e-02 -1.16822246e-01 5.94238497e-01 2.00987313e-01

2.67688072e-02 -1.37257949e-01 1.87424278e-01 1.36874494e-02

-6.05406630e-01 -2.74189112e-01 4.97206939e-02 7.97070518e-02

4.99653184e-02 2.22867194e-02 9.69866279e-03 8.62316810e-03]

[-5.33905602e-02 -4.24298675e-02 -6.17203363e-01 -1.34125655e-02

-2.08692510e-01 -2.45048817e-01 -2.14462005e-01 2.33784858e-02

-1.35940941e-01 9.44141370e-03 9.69567755e-02 2.76592924e-02

1.23863952e-01 -6.73404319e-02 -2.27623925e-01 2.65831198e-02

6.01991599e-01 7.77353107e-03 -1.74550715e-03 -2.23350919e-02]

[-4.00844315e-01 -3.24949514e-01 9.11471660e-02 1.04395173e-01

2.31670203e-01 1.16353600e-02 -1.46794990e-01 -9.07407616e-02

-9.44173151e-03 -2.30344118e-01 4.97195570e-01 -1.91447431e-01

2.74699492e-02 7.53051304e-02 -1.39743044e-01 -2.12480301e-01

-4.06660410e-02 3.60845244e-01 -2.97923148e-01 4.70168334e-02]

[-4.45924921e-01 -3.05884540e-01 1.57109756e-01 2.12990044e-02

-4.84610759e-01 2.37641647e-01 6.68994379e-02 8.80260282e-02

-3.12489221e-02 6.06898283e-02 -5.06910900e-02 9.57178901e-03

3.55987544e-02 -1.19518735e-01 1.26107051e-01 5.05165707e-02

8.27138312e-02 -3.89276668e-01 -4.03182809e-01 1.29000337e-01]

[-5.81560226e-01 -2.45734286e-01 1.23075810e-01 1.54166545e-01

-1.14514682e-01 1.14518802e-01 -6.11618709e-02 5.62301732e-02

-7.11764977e-02 -7.41040091e-03 6.73110933e-02 -1.22910218e-01

1.74988079e-01 -3.68605996e-01 1.40620323e-01 -3.26573462e-02

1.16039205e-03 4.75057047e-02 5.48853241e-01 -1.17337520e-01]

[-5.44930230e-02 -5.21152589e-02 -3.05858855e-01 -1.76446419e-01

2.99841550e-01 5.42571242e-01 -2.17581122e-02 -6.70149820e-02

3.75545737e-02 2.77580366e-02 3.92058027e-02 -8.15804008e-02

-8.16578008e-02 2.71991030e-01 5.64157257e-01 2.15813842e-02

2.61723574e-01 1.77175147e-02 3.96573579e-02 1.52695717e-02]

[-2.40429387e-01 -3.00937333e-01 4.86646674e-03 -5.38328944e-01

2.71375403e-01 -3.04424712e-01 5.61104075e-02 -1.10447283e-01

5.53415322e-03 -6.77444081e-02 1.14757571e-01 -4.16385133e-02

-8.48757857e-02 2.19092393e-01 -1.42329942e-01 -1.51535623e-01

-4.75179702e-02 -4.80079755e-01 1.71547693e-01 -5.50752265e-03]

[-4.17200254e-02 -1.12661003e-01 -3.56508178e-01 -9.65223444e-02

1.83043297e-01 3.61581754e-01 -6.04764837e-03 1.27011215e-01

-2.72832045e-01 5.84164466e-01 6.55580141e-02 -5.56132826e-02

-2.06603903e-02 -1.24119099e-01 -3.47351088e-01 7.34893572e-02

-3.20369927e-01 -6.98185724e-04 -3.36751412e-02 2.06396280e-03]

[-2.87846724e-02 -3.94687297e-02 -2.39531313e-01 -1.17457712e-01

-4.48507424e-03 1.19559026e-01 -1.25202515e-01 6.12179492e-02

8.91104369e-01 -7.86395688e-03 -7.40060549e-02 -1.31303915e-01

3.36466165e-02 -2.16138123e-01 -1.46654709e-01 -1.72530754e-02

-7.55198851e-02 -8.55902389e-03 -1.88849640e-02 8.46486444e-03]]

Select an arbitrary topic-id and determine the documents, which have the highest relevance value for this topic:

topicId=7 #select an arbitrary topic-id

topicRelevance=docTopic[:,topicId]

docsoftopic= np.array(topicRelevance).argsort()

relevanceValue= np.sort(topicRelevance)

print(docsoftopic) #most relevant document for selected topic is at first position

print(relevanceValue) #highest relevance document/topic-relevance-value is at first position

[ 2 7 17 13 16 9 1 3 0 6 4 12 15 19 14 8 18 11 10 5]

[-0.7492833 -0.48423739 -0.11044728 -0.09074076 -0.06701498 -0.05548321

-0.04694638 -0.04489727 -0.02013245 0.00272558 0.02267561 0.02337849

0.05623017 0.06121795 0.08802603 0.09857504 0.12701122 0.20098731

0.23175794 0.23837439]

TOP=8

print("Selected Topic:\n",lsi.show_topic(topicId))

print("#"*50)

print("Docs with the highest negative value w.r.t the selected topic")

for idx in docsoftopic[:TOP]:

print("-"*20)

print(idx,"\n",techdocs[idx])

print("#"*50)

print("Docs with the highest positive value w.r.t the selected topic")

for idx in docsoftopic[-TOP:]:

print("-"*20)

print(idx,"\n",techdocs[idx])

Selected Topic:

[('party', -0.2488676878930281), ('middleman', -0.2488676878930281), ('two', -0.24596576983629964), ('ukraine', -0.2134234697117422), ('cyber', -0.1422823131411615), ('offensive', -0.1422823131411615), ('building', -0.12443384394651405), ('actually', -0.12443384394651405), ('despite', -0.12443384394651405), ('comes', -0.12443384394651405)]

##################################################

Docs with the highest negative value w.r.t the selected topic

--------------------

2

['building', 'better', 'middleman', 'comes', 'mind', 'hear', 'term', 'two', 'sided', 'market', '?”', 'maybe', 'imagine', 'party', 'needs', 'something', 'interact', 'party', 'provides', 'despite', 'number', 'two', 'name', 'actually', 'someone', 'else', 'involved', 'middleman', 'entity', 'sits', 'parties', 'make', '[…]']

--------------------

7

['day', 'kyiv', 'experience', 'working', 'ukraine', 'offensive', 'cyber', 'team', 'jeffrey', 'carrmarch', '22', '2022', 'russia', 'invaded', 'ukraine', 'february', '24th', 'working', 'two', 'offensive', 'cyber', 'operators', 'gurmo', 'main', 'intelligence', 'directorate', 'ministry', 'defense', 'ukraine', 'several', 'months', 'trying', 'help', 'raise', 'funds', 'expand', 'development', 'osint', 'open', '[…]']

--------------------

17

['azurer', 'update', 'new', 'may', 'june', 'hong', 'ooi', 'summary', 'updates', 'azurer', 'family', 'packages', 'may', 'june', '2021', 'azureauth', 'change', 'default', 'caching', 'behaviour', 'disable', 'cache', 'running', 'inside', 'shiny', 'update', 'shiny', 'vignette', 'clean', 'redirect', 'page', 'authenticating', 'thanks', 'tyler', 'littlefield', ').', 'add', 'create_azurer_dir', 'function', 'create', 'caching', 'directory', 'manually', 'useful', 'non', 'interactive', 'sessions', 'also', 'jupyter', 'notebooks', 'technically', 'interactive', 'sense', 'cannot', 'read', 'user', 'input', 'console', 'prompt', 'azuregraph', 'add', 'enhanced', 'support', 'paging', 'api', 'many', '...']

--------------------

13

['using', 'microsoft365r', 'shiny', 'hong', 'ooi', 'article', 'lightly', 'edited', 'version', 'microsoft365r', 'shiny', 'vignette', 'latest', 'microsoft365r', 'release', 'describe', 'incorporate', 'microsoft365r', 'interactive', 'authentication', 'azure', 'active', 'directory', 'aad', 'shiny', 'web', 'app', 'steps', 'involved', 'register', 'app', 'aad', 'use', 'app', 'id', 'authenticate', 'get', 'oauth', 'token', 'pass', 'token', 'microsoft365r', 'functions', 'app', 'registration', 'default', 'microsoft365r', 'app', 'registration', 'works', 'package', 'used', 'local', 'machine', 'support', 'running', 'remote', 'server', '...']

--------------------

16

['identity', 'problems', 'get', 'bigger', 'metaverse', 'hype', 'surrounding', 'metaverse', 'results', 'something', 'real', 'could', 'improve', 'way', 'live', 'work', 'play', 'could', 'create', 'hellworld', 'get', 'want', 'whatever', 'people', 'think', 'read', 'metaverse', 'originally', 'imagined', 'snow', 'crash', 'vision', '[…]']

--------------------

9

['azurecosmosr', 'interface', 'azure', 'cosmos', 'db', 'hong', 'ooi', 'last', 'week', 'announced', 'azurecosmosr', 'interface', 'azure', 'cosmos', 'db', 'fully', 'managed', 'nosql', 'database', 'service', 'azure', 'post', 'gives', 'short', 'rundown', 'main', 'features', 'azurecosmosr', 'explaining', 'azure', 'cosmos', 'db', 'tricky', 'excerpt', 'official', 'description', 'azure', 'cosmos', 'db', 'fully', 'managed', 'nosql', 'database', 'modern', 'app', 'development', 'single', 'digit', 'millisecond', 'response', 'times', 'automatic', 'instant', 'scalability', 'guarantee', 'speed', 'scale', 'business', 'continuity', 'assured', 'sla', 'backed', 'availability', 'enterprise', 'grade', 'security', 'app', 'development', 'faster', 'productive', 'thanks', 'turnkey', 'multi', 'region', '...']

--------------------

1

['outlook', 'client', 'support', 'microsoft365r', 'available', 'beta', 'test', 'hong', 'ooi', 'announcement', 'beta', 'outlook', 'email', 'client', 'part', 'microsoft365r', 'package', 'install', 'github', 'repository', 'devtools', '::', 'install_github', '("', 'azure', 'microsoft365r', '")', 'client', 'provides', 'following', 'features', 'send', 'reply', 'forward', 'emails', 'optionally', 'composed', 'blastula', 'emayili', 'copy', 'move', 'emails', 'folders', 'create', 'delete', 'copy', 'move', 'folders', 'add', 'remove', 'download', 'attachments', 'plan', 'submit', 'cran', 'sometime', 'next', 'month', 'period', 'public', 'testing', 'please', 'give', 'try', 'give', 'feedback', 'either', 'via', 'email', 'opening', 'issue', '...']

--------------------

3

['new', 'azurer', 'hong', 'ooi', 'update', 'happening', 'azurer', 'suite', 'packages', 'first', 'may', 'noticed', 'holiday', 'season', 'packages', 'updated', 'cran', 'change', 'maintainer', 'email', 'non', 'microsoft', 'address', 'left', 'microsoft', 'role', 'westpac', 'bank', 'australia', 'sad', 'leaving', 'intend', 'continue', 'maintaining', 'updating', 'packages', 'end', 'changes', 'recently', 'submitted', 'cran', 'shortly', 'azureauth', 'allows', 'obtaining', 'tokens', 'organizations', '”...']

##################################################

Docs with the highest positive value w.r.t the selected topic

--------------------

15

['microsoft365r', 'interface', 'microsoft', '365', 'suite', 'happy', 'announce', 'microsoft365r', 'package', 'working', 'microsoft', '365', 'formerly', 'known', 'office', '365', 'suite', 'cloud', 'services', 'microsoft365r', 'extends', 'interface', 'microsoft', 'graph', 'api', 'provided', 'azuregraph', 'package', 'provide', 'lightweight', 'yet', 'powerful', 'interface', 'sharepoint', 'onedrive', 'support', 'teams', 'outlook', 'soon', 'come', 'microsoft365r', 'available', 'cran', 'install', 'development', 'version', 'github', 'devtools', '::', 'install_github', '("', 'azure', 'microsoft365r', '").', 'authentication', 'first', 'time', 'call', 'one', 'microsoft365r', 'functions', 'see', '),', 'use', 'internet', 'browser', 'authenticate', 'azure', 'active', 'directory', 'aad', '),...']

--------------------

19

['general', 'purpose', 'pendulum', 'pendulums', 'swing', 'one', 'way', 'swing', 'back', 'way', 'oscillate', 'quickly', 'slowly', 'slowly', 'watch', 'earth', 'rotate', 'underneath', 'cliche', 'talk', 'technical', 'trend', 'pendulum', ',”', 'though', 'accurate', 'often', 'enough', 'may', 'watching', 'one', '[…]']

--------------------

14

['teams', 'support', 'microsoft365r', 'hong', 'ooi', 'happy', 'announce', 'version', 'microsoft365r', 'interface', 'microsoft', '365', 'cran', 'version', 'adds', 'support', 'microsoft', 'teams', 'much', 'requested', 'feature', 'access', 'team', 'microsoft', 'teams', 'use', 'get_team', '()', 'function', 'provide', 'team', 'name', 'id', 'also', 'list', 'teams', 'list_teams', '().', 'return', 'objects', 'r6', 'class', 'ms_team', 'methods', 'working', 'channels', 'drives', 'list_teams', '()', 'team']

--------------------

8

['epstein', 'barr', 'cause', 'cause', 'one', 'intriguing', 'news', 'stories', 'new', 'year', 'claimed', 'epstein', 'barr', 'virus', 'ebv', 'cause', 'multiple', 'sclerosis', 'ms', '),', 'suggested', 'antiviral', 'medications', 'vaccinations', 'epstein', 'barr', 'could', 'eliminate', 'ms', 'md', 'epidemiologist', 'think', 'article', 'forces', 'us', 'think', '[…]']

--------------------

18

['recommendations', 'us', 'live', 'household', 'communal', 'device', 'like', 'amazon', 'echo', 'google', 'home', 'hub', 'probably', 'use', 'play', 'music', 'live', 'people', 'may', 'find', 'time', 'spotify', 'pandora', 'algorithm', 'seems', 'know', 'well', 'find', 'songs', 'creeping', '[…]']

--------------------

11

['ai', 'adoption', 'enterprise', '2022', 'december', '2021', 'january', '2022', 'asked', 'recipients', 'data', 'ai', 'newsletters', 'participate', 'annual', 'survey', 'ai', 'adoption', 'particularly', 'interested', 'anything', 'changed', 'since', 'last', 'year', 'companies', 'farther', 'along', 'ai', 'adoption', 'working', 'applications', 'production', 'using', 'tools', 'like', 'automl', 'generate', '[…]']

--------------------

10

['microsoft365r', 'testers', 'wanted', 'hong', 'ooi', 'microsoft365r', 'author', 'updated', 'package', 'github', 'following', 'features', 'add', 'support', 'shared', 'mailboxes', 'get_business_outlook', '().', 'access', 'shared', 'mailbox', 'supply', 'one', 'arguments', 'shared_mbox_id', 'shared_mbox_name', 'shared_mbox_email', 'specifying', 'id', 'displayname', 'email', 'address', 'mailbox', 'respectively', 'add', 'support', 'teams', 'chats', 'including', 'one', 'one', 'group', 'meeting', 'chats', ').', 'use', 'list_chats', '()', 'function', 'list', 'chats', 'participating', 'get_chat', '()`', 'function', 'retrieve', 'specific', 'chat', 'chat', 'object', 'class', 'ms_chat', 'similar', 'methods', 'channel', 'send', 'list', 'retrieve', 'messages', ',...']

--------------------

5

['future', 'security', 'future', 'cybersecurity', 'shaped', 'need', 'companies', 'secure', 'networks', 'data', 'devices', 'identities', 'includes', 'adopting', 'security', 'frameworks', 'like', 'zero', 'trust', 'help', 'companies', 'secure', 'internal', 'information', 'systems', 'data', 'cloud', 'sheer', 'volume', 'new', 'threats', 'today', 'security', 'landscape', 'become', 'complex', '[…]']

import gensim

lda = gensim.models.ldamodel.LdaModel(corpus_tfidf, num_topics=20, id2word = dictionary)

#!pip install pyLDAvis

#import pyLDAvis.gensim as gensimvis

#import pyLDAvis

#vis_en = gensimvis.prepare(lda, corpus_tfidf, dictionary)

#pyLDAvis.display(vis_en)