9.2. Langchain Quickstart Llama3 with Ollama#

This is an adaptation from Langchain Quickstart https://python.langchain.com/docs/get_started/quickstart

What you will learn

Exactly the same as in the previous subsection, however now we run the Llamma 3 LLM and the embedding on the local machine

Apply ollama for running LLM applications locally.

9.2.1. Ollama#

Ollama is an open-source project which allows to easily run LLMs locally. Sota LLMs like Llamma 3, Gemma 2, Mistral or Phi 3 can be applied through Ollama.

Installation: Go to https://ollama.com, download Ollama and follow the in installation instructions.

Download LLM: After the installation process type

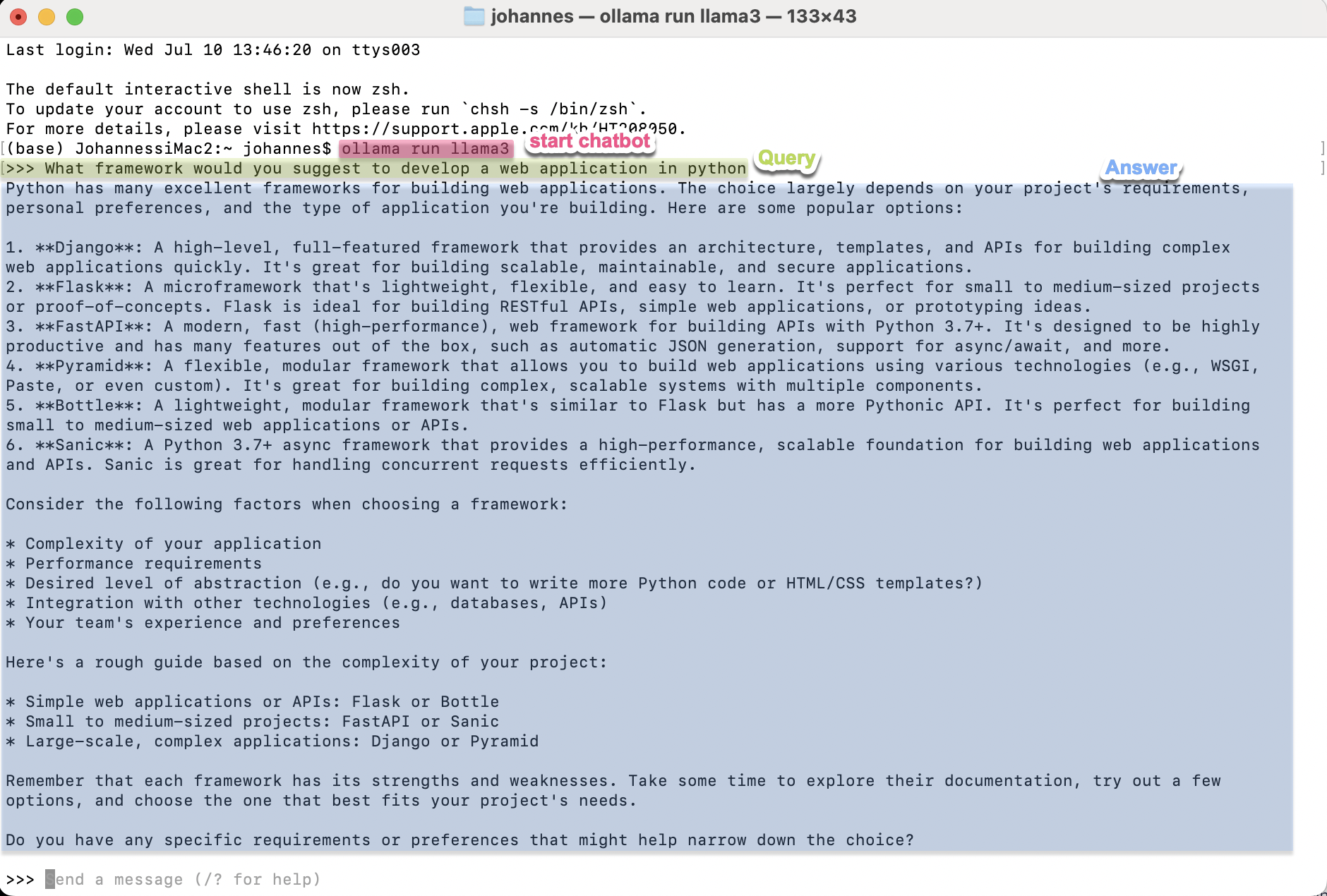

ollama run llama3into your terminal or shell. This will download llama3 to your local disk (if it is not yet there). In the same way you can also download any other LLM, which is provided by Ollama.Query/Chat: The command

ollama run llama3will start the chatbot. Enter your question.

Type /? into the shell in order to get information about all possible Ollama commands.

In this section however, we do not focus on the command-line usage of Ollama, but on how it will be accessed within a Python script or a Jupyter Notebook. This is demonstrated below.

9.2.2. Basic LLM Usage for Question Answering#

See also: https://ollama.com/blog/llama3

from langchain_community.chat_models import ChatOllama

llm = ChatOllama(model="llama3")

llm.invoke("how can langsmith help with testing?")

AIMessage(content="Langsmith, as a language model, can be incredibly helpful in various aspects of software testing. Here are some ways it can assist:\n\n1. **Automated Testing**: Langsmith can generate test cases and test data for automated testing frameworks like Selenium or Pytest. By analyzing the code's syntax and semantics, Langsmith can create relevant test scenarios.\n2. **Error Detection**: With its understanding of natural language, Langsmith can analyze error messages and warnings generated during testing to identify potential issues and provide insights on how to resolve them.\n3. **Test Data Generation**: Langsmith can generate realistic data for testing purposes, such as user input, API responses, or database records. This helps in simulating real-world scenarios and reducing the need for manual data creation.\n4. **API Testing**: By analyzing API documentation and code, Langsmith can generate test cases for API endpoints, including requests, responses, and expected results.\n5. **Code Review**: Langsmith's understanding of programming concepts allows it to review code snippets, identify potential issues or bugs, and provide suggestions for improvement.\n6. **Test Case Writing**: Langsmith can assist in writing test cases by providing a template with placeholders for variables, making it easier to create comprehensive tests.\n7. **Documentation Analysis**: By analyzing software documentation (e.g., README files), Langsmith can extract relevant information about the system's behavior, helping testers write more effective test cases.\n8. **Test Plan Generation**: Langsmith can help generate test plans by identifying key features, scenarios, and edge cases, making it easier to prioritize testing efforts.\n9. **Bug Reporting**: When a bug is encountered during testing, Langsmith can assist in writing a clear and concise bug report, including steps to reproduce the issue and expected results.\n10. **Test Environment Setup**: Langsmith can provide guidance on setting up test environments by analyzing system requirements and dependencies.\n\nWhile Langsmith is not a replacement for human testers, it can certainly augment their efforts by providing valuable insights, automating repetitive tasks, and streamlining the testing process.", response_metadata={'model': 'llama3', 'created_at': '2024-07-17T11:48:15.802808Z', 'message': {'role': 'assistant', 'content': ''}, 'done_reason': 'stop', 'done': True, 'total_duration': 280375046228, 'load_duration': 163034716413, 'prompt_eval_count': 18, 'prompt_eval_duration': 1365111000, 'eval_count': 415, 'eval_duration': 115969886000}, id='run-d6b4e52b-d9b0-4787-93bb-8805e4a45a59-0')

9.2.2.1. Create simple Pipeline#

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

prompt = ChatPromptTemplate.from_messages([

("system", "You are world class technical documentation writer."),

("user", "{input}")

])

output_parser = StrOutputParser()

chain = prompt | llm | output_parser

9.2.2.2. Query#

chain.invoke({"input": "how can langsmith help with testing?"})

"As a Langsmith, I can assist with testing by:\n\n1. **Automating manual testing**: By generating test data and executing it through APIs, I can automate the testing process, reducing manual effort and increasing efficiency.\n2. **Providing test scenarios and cases**: I can help generate test scenarios and cases based on the requirements and specifications, ensuring that the tests are thorough and comprehensive.\n3. **Validating API responses**: I can validate API responses by comparing them with expected results, helping to ensure that APIs are functioning correctly.\n4. **Testing UI interactions**: I can simulate user interactions with a UI, such as clicking buttons or filling out forms, to test how different scenarios affect the application's behavior.\n5. **Detecting bugs and errors**: By analyzing code and generating test cases, I can help identify potential bugs and errors before they become major issues.\n6. **Creating test data sets**: I can generate test data sets that are relevant to the specific testing requirements, ensuring that the tests are realistic and effective.\n7. **Providing insights on test coverage**: By analyzing the test results, I can provide insights on test coverage, helping to identify areas where additional testing is needed.\n\nBy leveraging these capabilities, Langsmith can significantly reduce the time and effort required for testing, allowing developers to focus on building high-quality software faster."

9.2.3. Basic RAG Usage#

In the previous subsection it has been shown, how a LLM can be applied for question-answering. Now, we like to apply Retrieval Augmented Generation (RAG) for question answering. The RAG system integrates a LLM, but in contrast to the previously described basic usage, in RAG more context information is passed to the LLM. The corresponding answer of the LLM then not only depends on the data on which the LLM has been trained on, but also on external knowledge from documents, provided by the user. This external knowledge is passed as context to the LLM, together with the query. The external knowledge, which is used as context, certainly depends on the user’s query. Therefore, the query is first passed to a vector-database, which returns the most relevant documents for the given query. These relevant documents are used as context.

Below we

Collect external documents from the web

Segment these documents into chunks

Calculate an embedding (a vector) for each chunk

Store the chunk-embeddings in a vector DB.

9.2.3.1. Collect Documents for External Database#

from langchain_community.document_loaders import WebBaseLoader

loader = WebBaseLoader("https://docs.smith.langchain.com/user_guide",encoding="utf-8")

docs = loader.load()

USER_AGENT environment variable not set, consider setting it to identify your requests.

len(docs)

1

docs[0]

Document(metadata={'source': 'https://docs.smith.langchain.com/user_guide', 'title': 'LangSmith User Guide | 🦜️🛠️ LangSmith', 'description': 'LangSmith is a platform for LLM application development, monitoring, and testing. In this guide, we’ll highlight the breadth of workflows LangSmith supports and how they fit into each stage of the application development lifecycle. We hope this will inform users how to best utilize this powerful platform or give them something to consider if they’re just starting their journey.', 'language': 'en'}, page_content="\n\n\n\n\nLangSmith User Guide | 🦜️🛠️ LangSmith\n\n\n\n\n\n\n\nSkip to main contentGo to API DocsSearchRegionUSEUGo to AppQuick StartUser GuideTracingEvaluationProduction Monitoring & AutomationsPrompt HubProxyPricingSelf-HostingCookbookThis is outdated documentation for 🦜️🛠️ LangSmith, which is no longer actively maintained.For up-to-date documentation, see the latest version.User GuideOn this pageLangSmith User GuideLangSmith is a platform for LLM application development, monitoring, and testing. In this guide, we’ll highlight the breadth of workflows LangSmith supports and how they fit into each stage of the application development lifecycle. We hope this will inform users how to best utilize this powerful platform or give them something to consider if they’re just starting their journey.Prototyping\u200bPrototyping LLM applications often involves quick experimentation between prompts, model types, retrieval strategy and other parameters.\nThe ability to rapidly understand how the model is performing — and debug where it is failing — is incredibly important for this phase.Debugging\u200bWhen developing new LLM applications, we suggest having LangSmith tracing enabled by default.\nOftentimes, it isn’t necessary to look at every single trace. However, when things go wrong (an unexpected end result, infinite agent loop, slower than expected execution, higher than expected token usage), it’s extremely helpful to debug by looking through the application traces. LangSmith gives clear visibility and debugging information at each step of an LLM sequence, making it much easier to identify and root-cause issues.\nWe provide native rendering of chat messages, functions, and retrieve documents.Initial Test Set\u200bWhile many developers still ship an initial version of their application based on “vibe checks”, we’ve seen an increasing number of engineering teams start to adopt a more test driven approach. LangSmith allows developers to create datasets, which are collections of inputs and reference outputs, and use these to run tests on their LLM applications.\nThese test cases can be uploaded in bulk, created on the fly, or exported from application traces. LangSmith also makes it easy to run custom evaluations (both LLM and heuristic based) to score test results.Comparison View\u200bWhen prototyping different versions of your applications and making changes, it’s important to see whether or not you’ve regressed with respect to your initial test cases.\nOftentimes, changes in the prompt, retrieval strategy, or model choice can have huge implications in responses produced by your application.\nIn order to get a sense for which variant is performing better, it’s useful to be able to view results for different configurations on the same datapoints side-by-side. We’ve invested heavily in a user-friendly comparison view for test runs to track and diagnose regressions in test scores across multiple revisions of your application.Playground\u200bLangSmith provides a playground environment for rapid iteration and experimentation.\nThis allows you to quickly test out different prompts and models. You can open the playground from any prompt or model run in your trace.\nEvery playground run is logged in the system and can be used to create test cases or compare with other runs.Beta Testing\u200bBeta testing allows developers to collect more data on how their LLM applications are performing in real-world scenarios. In this phase, it’s important to develop an understanding for the types of inputs the app is performing well or poorly on and how exactly it’s breaking down in those cases. Both feedback collection and run annotation are critical for this workflow. This will help in curation of test cases that can help track regressions/improvements and development of automatic evaluations.Capturing Feedback\u200bWhen launching your application to an initial set of users, it’s important to gather human feedback on the responses it’s producing. This helps draw attention to the most interesting runs and highlight edge cases that are causing problematic responses. LangSmith allows you to attach feedback scores to logged traces (oftentimes, this is hooked up to a feedback button in your app), then filter on traces that have a specific feedback tag and score. A common workflow is to filter on traces that receive a poor user feedback score, then drill down into problematic points using the detailed trace view.Annotating Traces\u200bLangSmith also supports sending runs to annotation queues, which allow annotators to closely inspect interesting traces and annotate them with respect to different criteria. Annotators can be PMs, engineers, or even subject matter experts. This allows users to catch regressions across important evaluation criteria.Adding Runs to a Dataset\u200bAs your application progresses through the beta testing phase, it's essential to continue collecting data to refine and improve its performance. LangSmith enables you to add runs as examples to datasets (from both the project page and within an annotation queue), expanding your test coverage on real-world scenarios. This is a key benefit in having your logging system and your evaluation/testing system in the same platform.Production\u200bClosely inspecting key data points, growing benchmarking datasets, annotating traces, and drilling down into important data in trace view are workflows you’ll also want to do once your app hits production.However, especially at the production stage, it’s crucial to get a high-level overview of application performance with respect to latency, cost, and feedback scores. This ensures that it's delivering desirable results at scale.Online evaluations and automations allow you to process and score production traces in near real-time.Additionally, threads provide a seamless way to group traces from a single conversation, making it easier to track the performance of your application across multiple turns.Monitoring and A/B Testing\u200bLangSmith provides monitoring charts that allow you to track key metrics over time. You can expand to view metrics for a given period and drill down into a specific data point to get a trace table for that time period — this is especially handy for debugging production issues.LangSmith also allows for tag and metadata grouping, which allows users to mark different versions of their applications with different identifiers and view how they are performing side-by-side within each chart. This is helpful for A/B testing changes in prompt, model, or retrieval strategy.Automations\u200bAutomations are a powerful feature in LangSmith that allow you to perform actions on traces in near real-time. This can be used to automatically score traces, send them to annotation queues, or send them to datasets.To define an automation, simply provide a filter condition, a sampling rate, and an action to perform. Automations are particularly helpful for processing traces at production scale.Threads\u200bMany LLM applications are multi-turn, meaning that they involve a series of interactions between the user and the application. LangSmith provides a threads view that groups traces from a single conversation together, making it easier to track the performance of and annotate your application across multiple turns.Was this page helpful?You can leave detailed feedback on GitHub.PreviousQuick StartNextOverviewPrototypingBeta TestingProductionCommunityDiscordTwitterGitHubDocs CodeLangSmith SDKPythonJS/TSMoreHomepageBlogLangChain Python DocsLangChain JS/TS DocsCopyright © 2024 LangChain, Inc.\n\n\n\n")

9.2.3.2. Chunking, Embedding and Storage in Vector DB#

from langchain_community.embeddings import OllamaEmbeddings

ollama_emb = OllamaEmbeddings(model="llama3")

from langchain_community.vectorstores import FAISS

from langchain_text_splitters import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter()

documents = text_splitter.split_documents(docs)

vector = FAISS.from_documents(documents, ollama_emb)

vector

<langchain_community.vectorstores.faiss.FAISS at 0x1172f2930>

9.2.3.3. Create Prompt#

from langchain.chains.combine_documents import create_stuff_documents_chain

prompt = ChatPromptTemplate.from_template("""Answer the following question based only on the provided context:

<context>

{context}

</context>

Question: {input}""")

document_chain = create_stuff_documents_chain(llm, prompt)

from langchain_core.documents import Document

document_chain.invoke({

"input": "how can langsmith help with testing?",

"context": [Document(page_content="langsmith can let you visualize test results")]

})

'According to the context, LangSmith can let you visualize test results.'

from langchain.chains import create_retrieval_chain

retriever = vector.as_retriever()

retrieval_chain = create_retrieval_chain(retriever, document_chain)

9.2.3.4. Query#

response = retrieval_chain.invoke({"input": "how can langsmith help with testing?"})

print(response["answer"])

According to the provided context, LangSmith can help with testing in the following ways:

1. **Debugging**: LangSmith allows developers to create datasets and run tests on their LLM applications. This enables debugging by looking through application traces.

2. **Test cases**: Langsmith makes it easy to run custom evaluations (both LLM and heuristic-based) to score test results.

3. **Comparison view**: The comparison view allows users to track and diagnose regressions in test scores across multiple revisions of their application.

4. **Playground**: The playground environment enables rapid iteration and experimentation, allowing developers to quickly test out different prompts and models.

5. **Beta testing**: LangSmith supports collecting feedback on how the LLM application is performing in real-world scenarios, helping to develop an understanding of where it's succeeding or failing.

6. **Annotating traces**: The platform allows annotators (PMs, engineers, or subject matter experts) to closely inspect interesting traces and annotate them with respect to different criteria.

7. **Adding runs to a dataset**: LangSmith enables adding runs as examples to datasets, expanding test coverage on real-world scenarios.

8. **Automations**: Automations can be used to automatically score traces, send them to annotation queues, or send them to datasets in near real-time.

These features and functionalities enable developers to create test cases, run tests, and evaluate their LLM applications effectively, ultimately helping with testing and refinement of their applications.